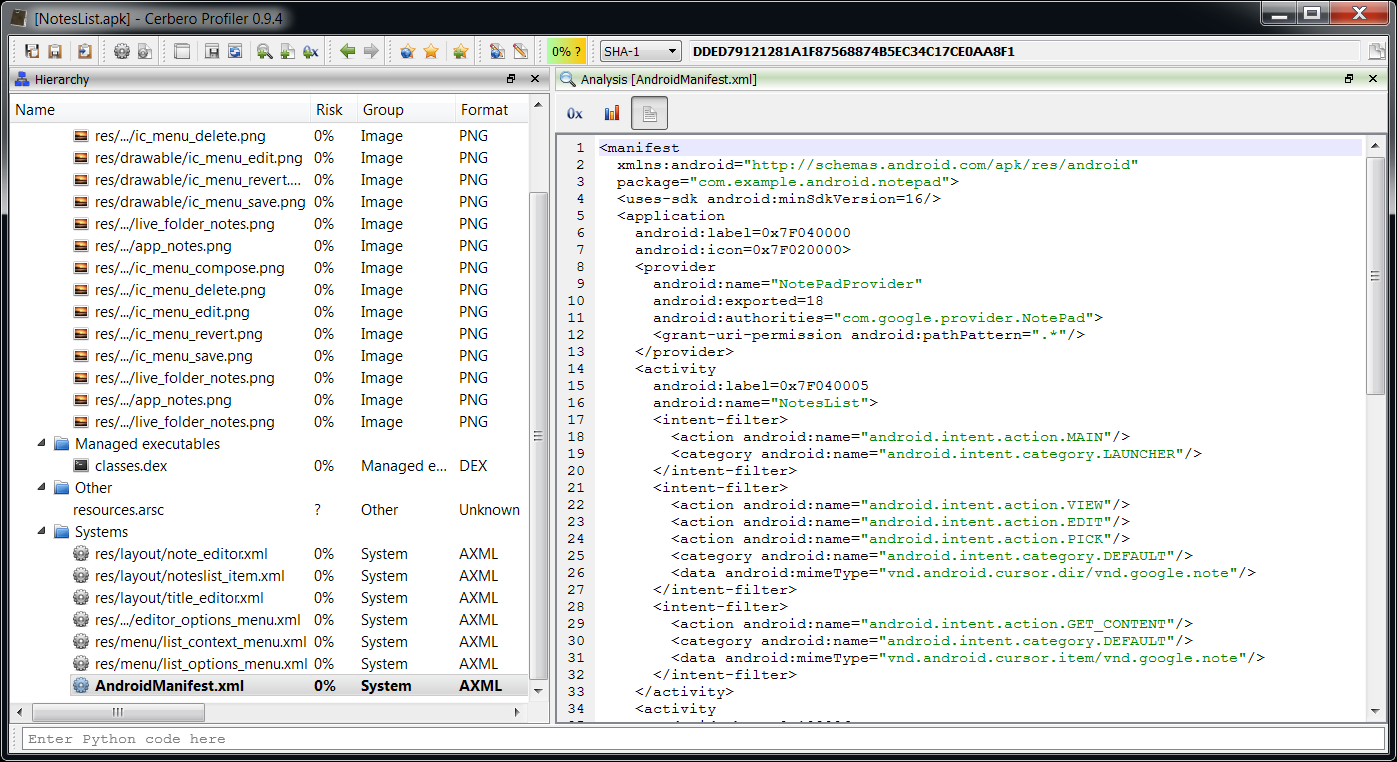

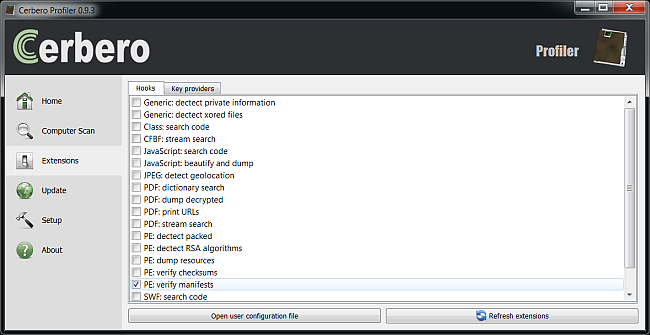

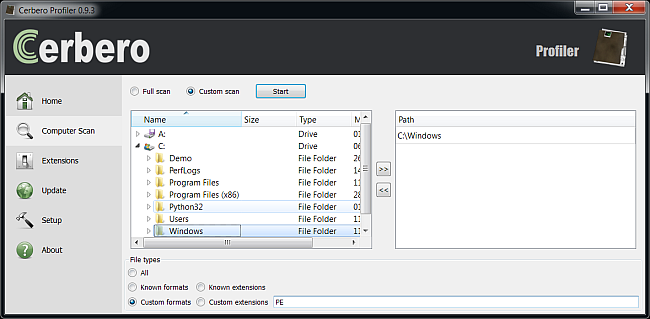

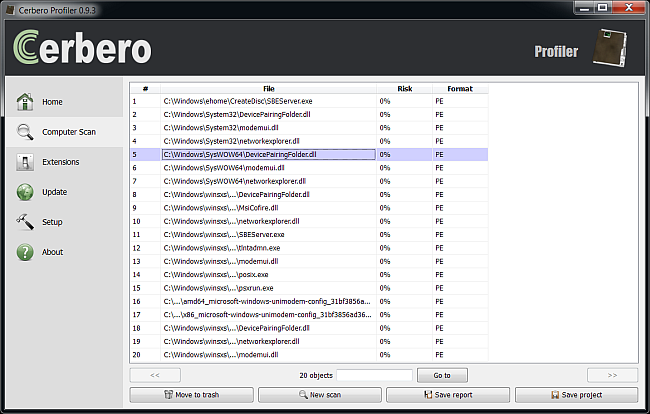

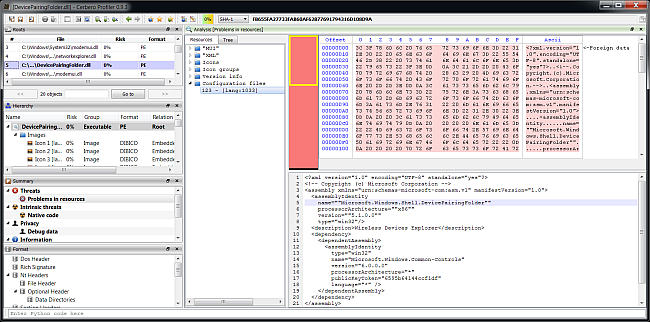

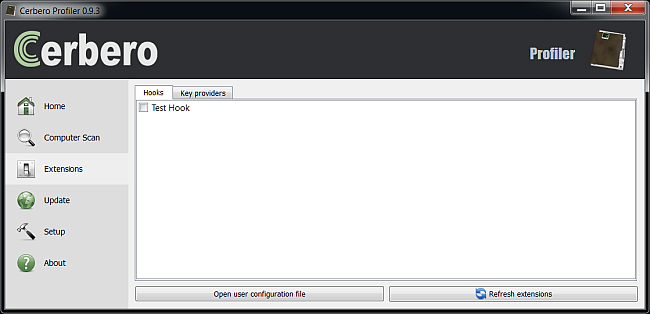

Some weeks ago (I don't even remember the exact time frame) a friend made me aware of this challenge: basically, injection of Dalvik code through native code. I found a few minutes this morning to look into it, and while I'm sure somebody else has already solved it, it's a nice way of showing a bit of how to reverse engineer Android applications with the Profiler.

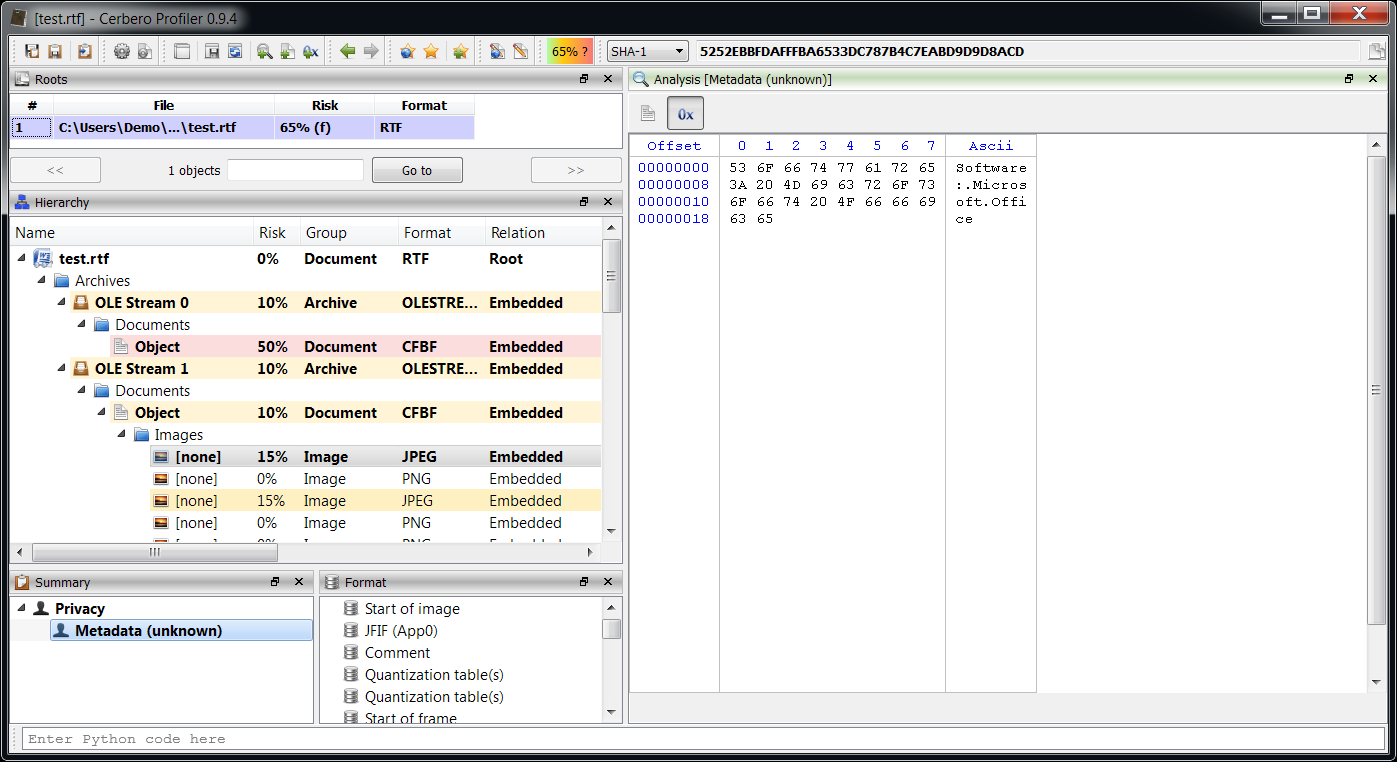

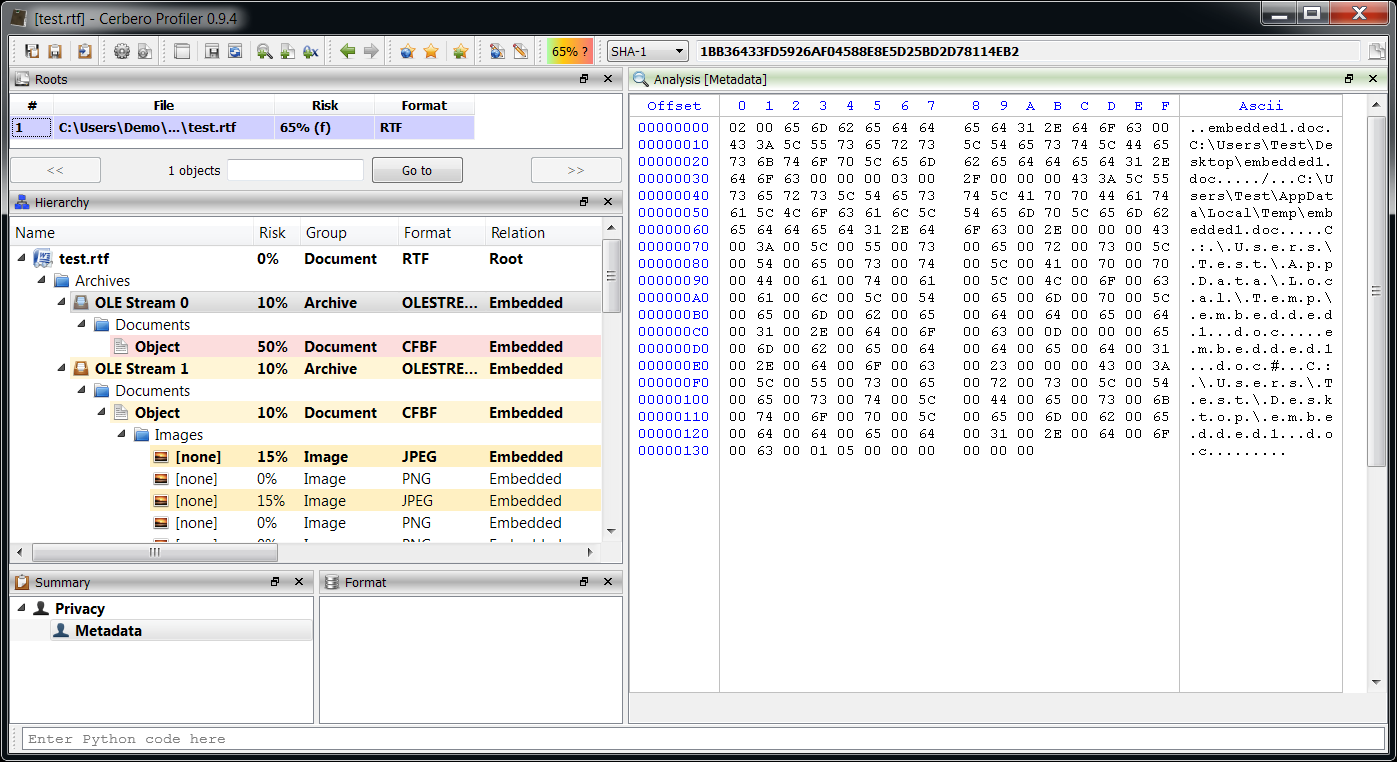

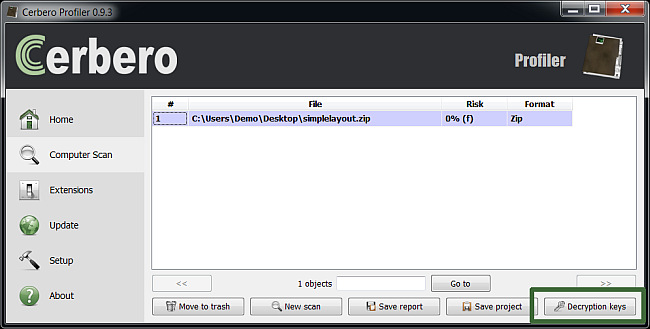

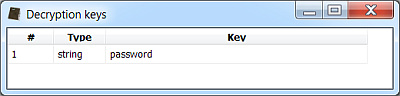

The first problem we encounter is that the APK (which is a Zip archive) has been tampered with. Opening it incorrectly prompts for a decryption key, because all file headers have had their GeneralBitFlag field modified, e.g.:

```
; file header
; offset: 28BB2

Signature             : 02014B50
CreatorVersion        : 0014
ExtractorVersion      : 0014
GeneralBitFlag        : 0809 <-- should be 0
CompressionMethod     : 0008
LastModTime           : 2899
LastModDate           : 4262
Crc32                 : CFEF1C2F
CompressedSize        : 000000F7
UncompressedSize      : 000001D0
FileNameLength        : 001C
ExtraFieldLength      : 0000
FileCommentLength     : 0000
Disk                  : 0000
InternalFileAttributes: 0000
ExternalFileAttributes: 00000000
LocalHeaderOffset     : 00000C15
FileName              : res/menu/activity_action.xml
ExtraField            :
FileComment           :
```

A few lines of code to fix this field for all file entries in the Zip archive:

from Pro.UI import *

obj = proContext().currentScanProvider().getObject()

n = obj.GetEntryCount()

for i in range(n):

obj.GetEntry(i).Set("GeneralBitFlag", 0)

s = obj.GetStream()

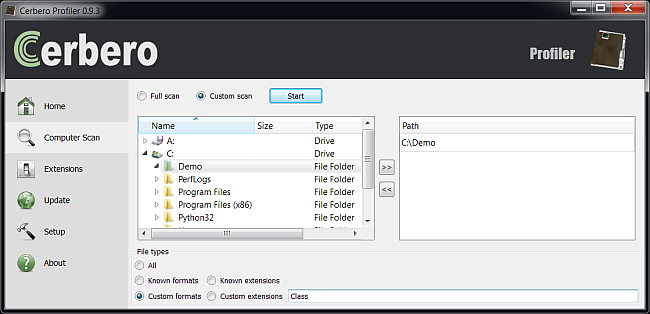

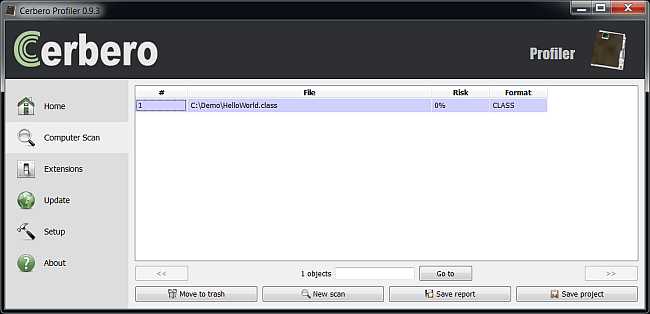

s.save(s.name() + "_fixed") Now we can explore the contents of the APK with the Profiler. We have the usual 'classes.dex' file plus the native library 'lib/armeabi/libnet.so'. Let's open the library with IDA. You'll notice the functions it contains aren't many and just by looking at them we'll stumble at this function:

void *__fastcall search(unsigned int a1)

{

unsigned int v1; // r4@1

__int32 v2; // r7@1

int v3; // r4@1

int v4; // r5@2

signed int v5; // r4@3

signed int v6; // r8@3

int v7; // r4@3

int v8; // r6@3

int v9; // r0@3

int v10; // r0@3

void *v11; // r4@3

v1 = a1;

v2 = sysconf(39);

v3 = v1 - v1 % v2;

do

{

v3 -= v2;

v4 = v3 + 40;

}

while ( !findmagic(v3 + 40) );

v5 = getStrIdx(v3 + 40, "L-ÿava/lang/String;", 0x12u);

v6 = getStrIdx(v4, "add", 3u);

v7 = getTypeIdx(v4, v5);

v8 = getClassItem(v4, v7);

v9 = getMethodIdx(v4, v6, v7);

v10 = getCodeItem(v4, v8, v9);

v11 = (void *)(v10 + 16);

mprotect((void *)(v10 - (v10 + 16) % (unsigned int)v2 + 16), v2, 3);

return memcpy(v11, inject, 0xDEu);

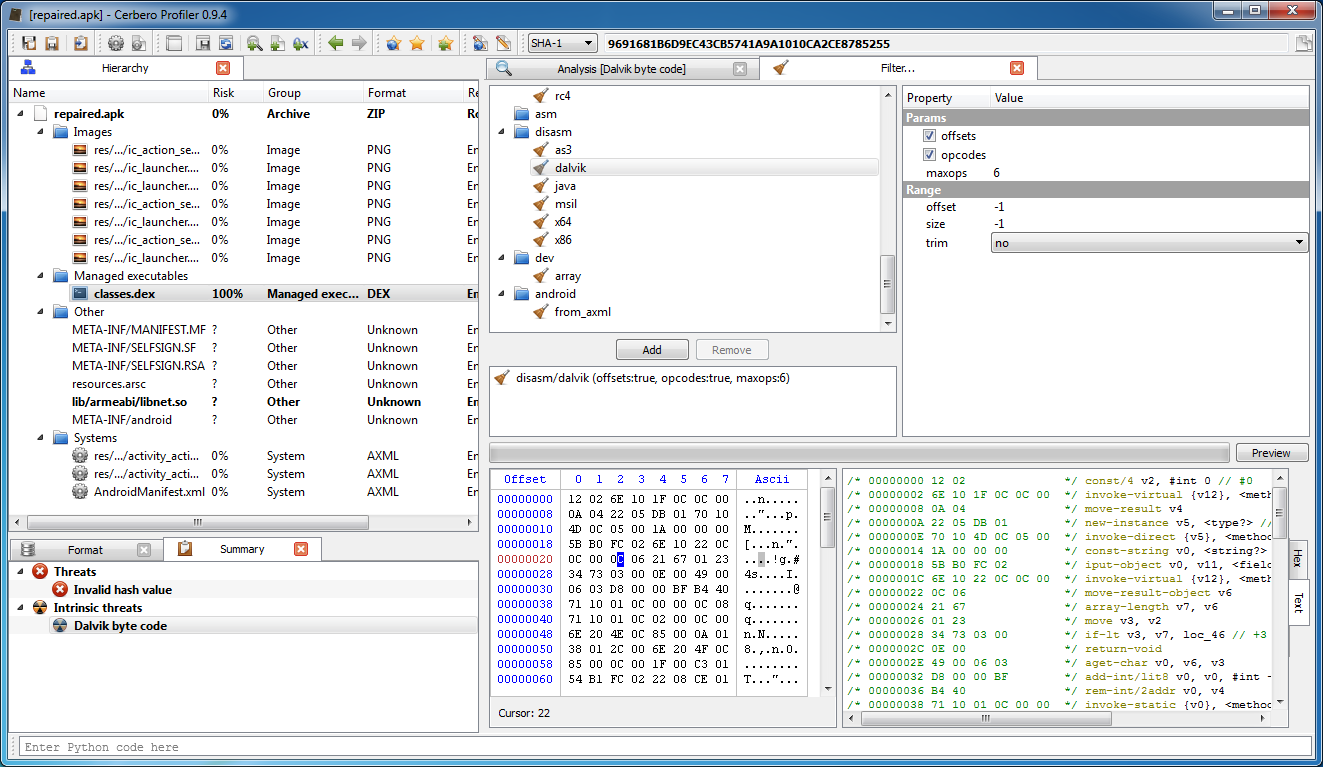

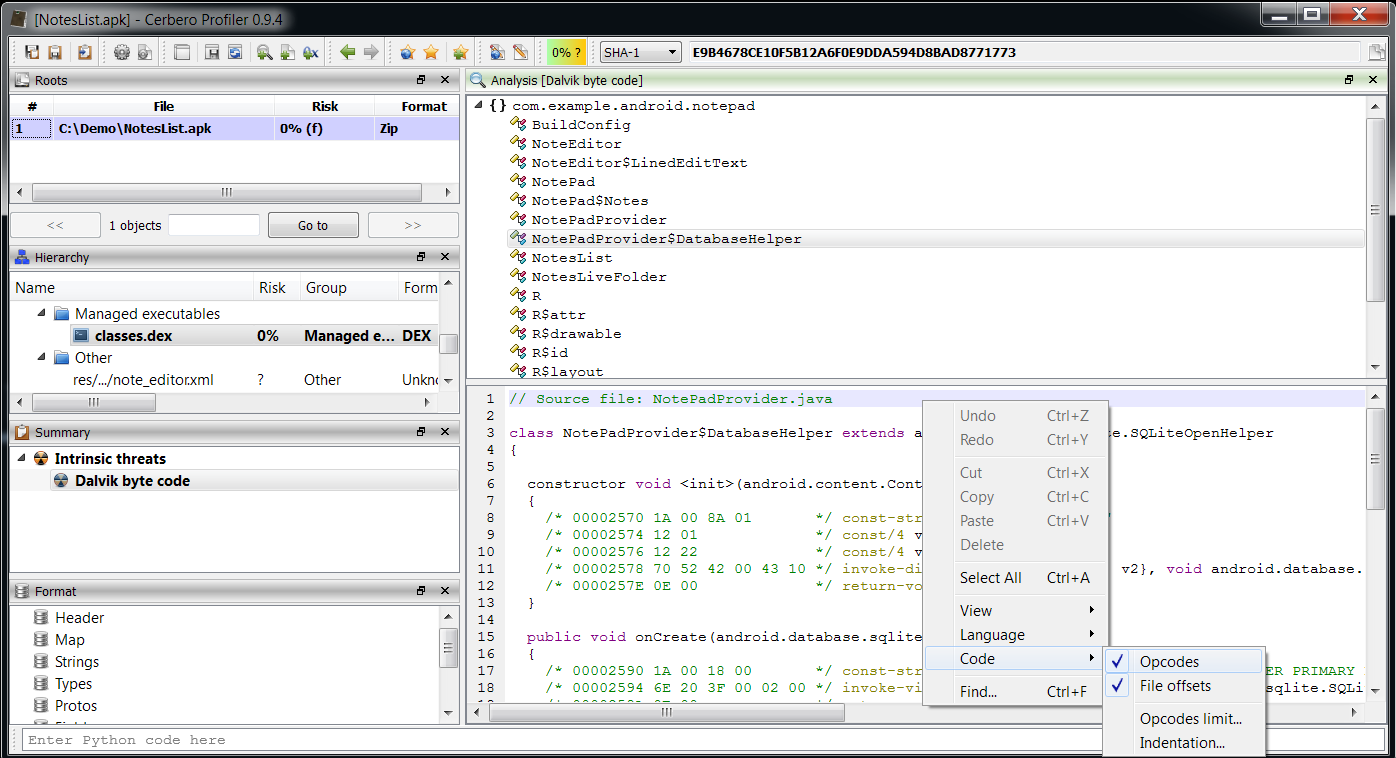

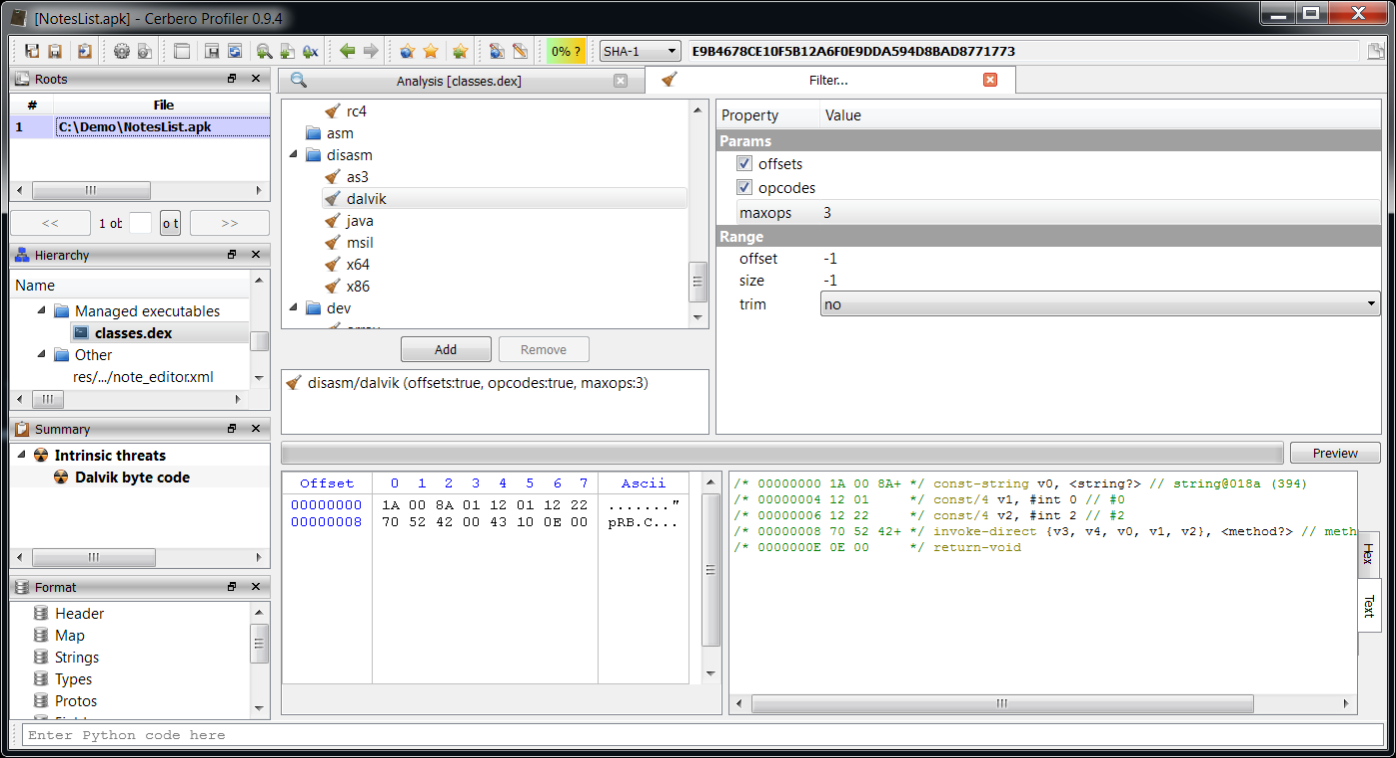

} It's clear that at some point during the execution of the Dalvik code this function is triggered which writes the array 'inject' into the memory space of the DEX module. We can verify that they are indeed Dalvik opcodes with the appropriate filter. Select the bytes representing the array in the hex view and then open the filter view:

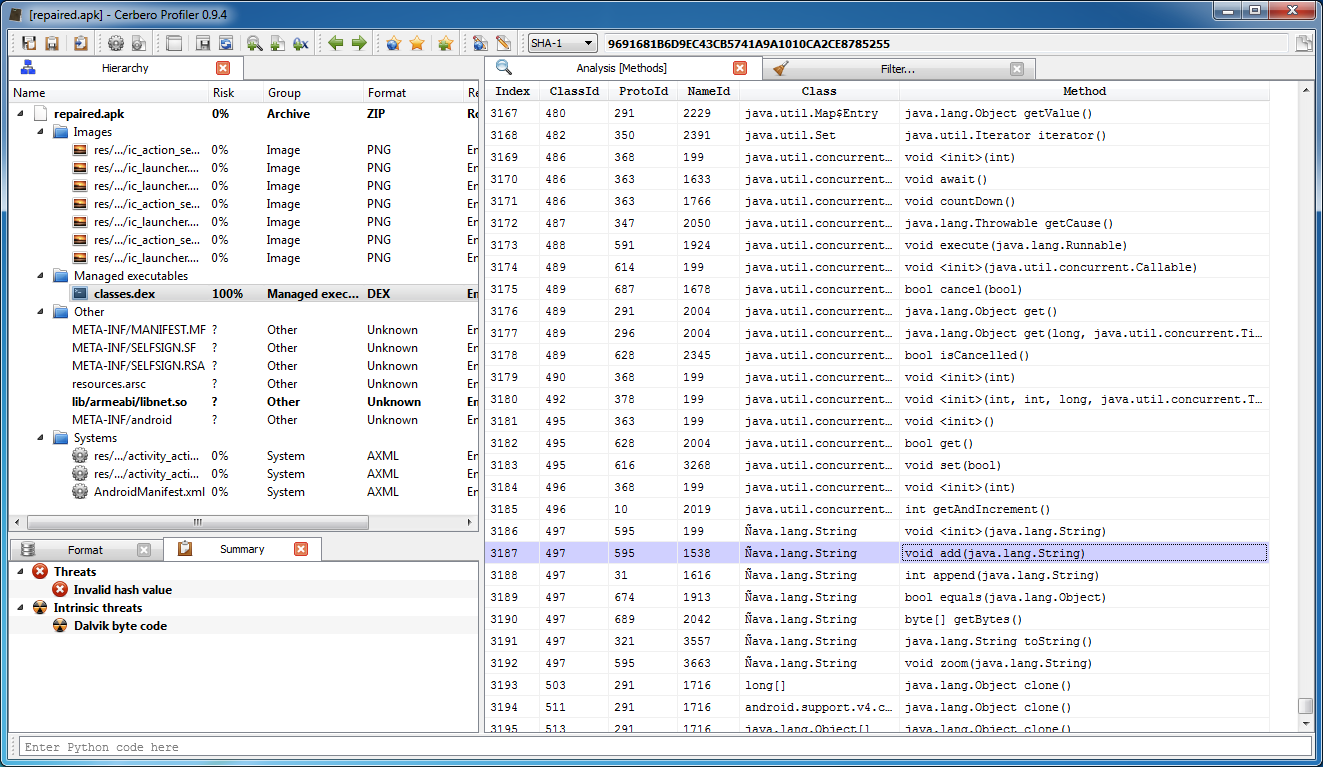
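To make the first half of `search` a bit more concrete, here is a minimal Python sketch of the backward page scan. It is only an illustration under assumptions: the body of `findmagic` isn't shown in the decompilation, so I'm assuming it checks for the `dex\n` magic, and I'm guessing that the `+ 40` skips a 40-byte optimized-DEX header. The names `find_dex_base`, `PAGE_SIZE` and `ODEX_HEADER_SIZE` are mine, not the crackme's.

```python
PAGE_SIZE = 4096        # assumption: the native code asks sysconf for the real value
ODEX_HEADER_SIZE = 40   # assumption: would explain the "+ 40" in the decompiled loop
DEX_MAGIC = b"dex\n"    # assumption: what findmagic presumably looks for


def find_dex_base(memory, address, page_size=PAGE_SIZE, skip=ODEX_HEADER_SIZE):
    """Walk back one page at a time from `address` until the magic shows up.

    `memory` is a bytes-like object standing in for the process memory and
    `address` is an offset into it. Returns the offset of the magic.
    """
    # align down to the start of the page containing `address`
    page = address - address % page_size
    while True:
        # like the do/while in the native code, step back before checking
        page -= page_size
        if page < 0:
            raise ValueError("no DEX magic found before the given address")
        candidate = page + skip
        if memory[candidate:candidate + len(DEX_MAGIC)] == DEX_MAGIC:
            return candidate
```

The native version has no lower bound on the loop; the sketch raises an exception instead of running off the start of the buffer.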

The functions called before the actual injection locate the exact position of the code. They help us as well: back to the Profiler, let's find the method "L-ÿava/lang/String;":"add":

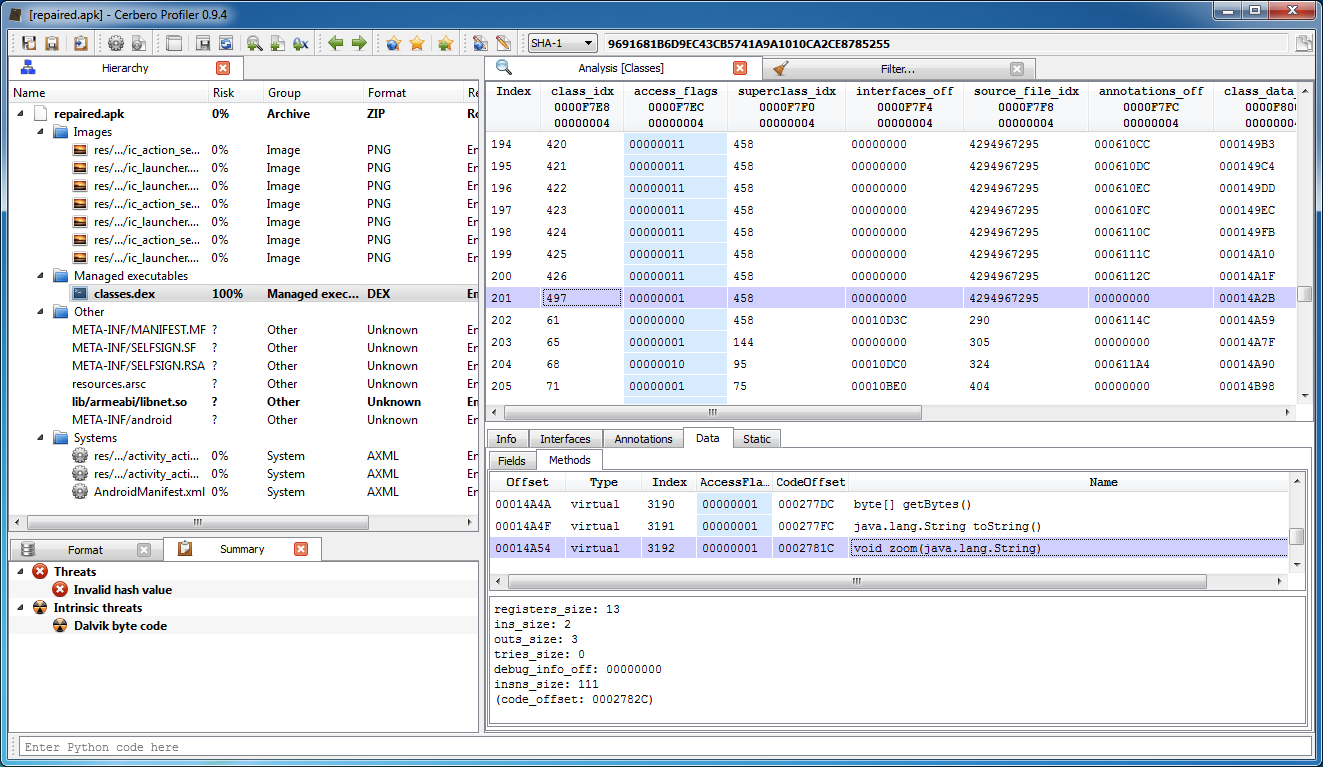
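If you're curious what a helper like `getStrIdx` has to do, the following Python sketch looks up a string in the string table of a DEX file. The real implementation isn't shown in the decompilation, so take this as an approximation based on the public DEX format: the header stores the number of string ids at offset 0x38 and the offset of the table at 0x3C, and each entry points to a ULEB128 length followed by the zero-terminated string data. The function names are hypothetical.

```python
import struct

STRING_IDS_FIELD = 0x38  # string_ids_size, immediately followed by string_ids_off


def read_uleb128(data, pos):
    """Decode an unsigned LEB128 value, returning (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def find_string_index(dex, needle):
    """Return the index of `needle` (raw bytes) in the string table, or -1."""
    count, table_off = struct.unpack_from("<II", dex, STRING_IDS_FIELD)
    for index in range(count):
        # each string_id_item is a 32-bit offset to the string data
        (data_off,) = struct.unpack_from("<I", dex, table_off + 4 * index)
        # skip the ULEB128 length prefix, then read up to the terminator
        _, start = read_uleb128(dex, data_off)
        end = dex.index(b"\x00", start)
        if dex[start:end] == needle:
            return index
    return -1
```

Called as `find_string_index(dex_bytes, b"add")`, it returns the string index that the type and method lookups would then build on.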

From here we get the class index and name. Just by looking at the disassembled class we'll notice a method filled with nops:

The code size of the method matches the payload size (111 * 2 = 0xDE):

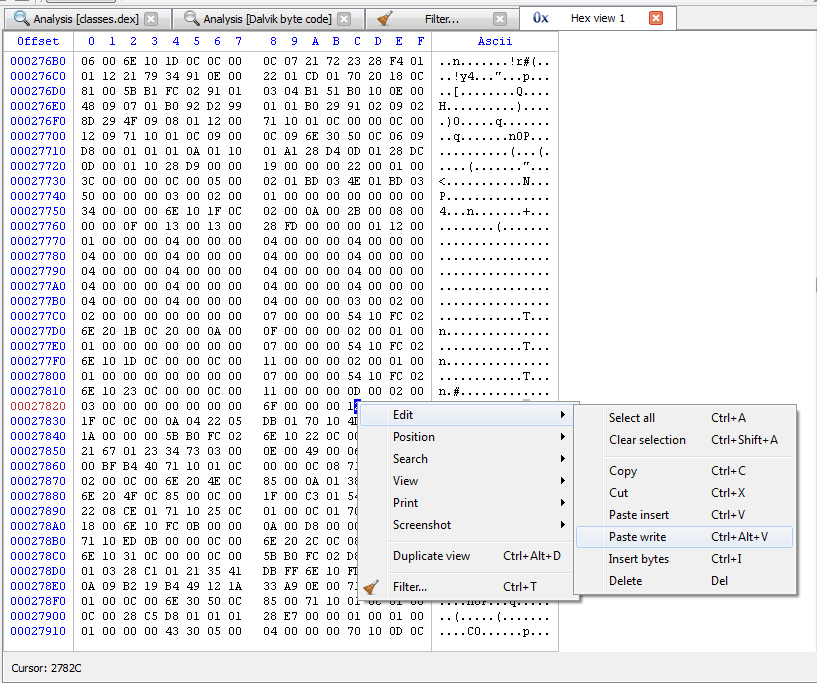
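The size check also explains the `+ 16` in the native function. In the DEX format a code item starts with a 16-byte header, and the instruction count is expressed in 16-bit code units, hence the multiplication by 2. A small Python sketch of that header follows; `parse_code_item` is a name I made up for illustration.

```python
import struct

# registers_size, ins_size, outs_size, tries_size (16 bits each),
# then debug_info_off and insns_size (32 bits each)
CODE_ITEM_HEADER = struct.Struct("<HHHHII")


def parse_code_item(dex, offset):
    """Return the header fields of the code item at `offset` plus the
    location and byte length of its instructions."""
    (registers_size, ins_size, outs_size, tries_size,
     debug_info_off, insns_size) = CODE_ITEM_HEADER.unpack_from(dex, offset)
    return {
        "registers_size": registers_size,
        "ins_size": ins_size,
        "outs_size": outs_size,
        "tries_size": tries_size,
        "debug_info_off": debug_info_off,
        "insns_size": insns_size,                      # in 16-bit code units
        "insns_off": offset + CODE_ITEM_HEADER.size,   # header is 16 bytes
        "insns_bytes": insns_size * 2,
    }
```

For a method with 111 code units, `insns_bytes` comes out as 222, which is the 0xDE passed to `memcpy`.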

Let's write back the instructions to the DEX module:
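Outside the Profiler, the same write-back could be approximated with a few lines of plain Python. This is just a sketch: `write_back` is a hypothetical helper, and the offset and payload have to be taken from your own analysis (the payload being the 0xDE bytes of the 'inject' array).

```python
def write_back(dex, insns_off, payload):
    """Return a copy of `dex` with `payload` written at `insns_off`.

    As a sanity check, refuse to overwrite anything that isn't a run of
    nops (zero bytes), since that is what the placeholder method contains.
    """
    end = insns_off + len(payload)
    if end > len(dex):
        raise ValueError("payload does not fit into the file")
    if any(dex[insns_off:end]):
        raise ValueError("target region is not filled with nops")
    return dex[:insns_off] + payload + dex[end:]
```

Note that this doesn't update the checksum and signature stored in the DEX header. That doesn't matter for static analysis, but it would if you wanted to run the patched file.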

By the way, we could do this just as well with a filter:

And now we can analyze the injected code:

```
public void zoom(java.lang.String)
{
    /* 0002782C 12 02             */ const/4 v2, #int 0 // #0
    /* 0002782E 6E 10 1F 0C 0C 00 */ invoke-virtual {v12}, int java.lang.String.length()
    /* 00027834 0A 04             */ move-result v4
    /* 00027836 22 05 DB 01       */ new-instance v5, java.util.HashMap
    /* 0002783A 70 10 4D 0C 05 00 */ invoke-direct {v5}, void java.util.HashMap.<init>()
    /* 00027840 1A 00 00 00       */ const-string v0, ""
    /* 00027844 5B B0 FC 02       */ iput-object v0, v11, java.lang.String content
    /* 00027848 6E 10 22 0C 0C 00 */ invoke-virtual {v12}, char[] java.lang.String.toCharArray()
    /* 0002784E 0C 06             */ move-result-object v6
    /* 00027850 21 67             */ array-length v7, v6
    /* 00027852 01 23             */ move v3, v2
loc_40:
    /* 00027854 34 73 03 00       */ if-lt v3, v7, loc_46 // +3
    /* 00027858 0E 00             */ return-void
loc_46:
    /* 0002785A 49 00 06 03       */ aget-char v0, v6, v3
    /* 0002785E D8 00 00 BF       */ add-int/lit8 v0, v0, #int -65 // #bf
    /* 00027862 B4 40             */ rem-int/2addr v0, v4
    /* 00027864 71 10 01 0C 00 00 */ invoke-static {v0}, java.lang.Integer java.lang.Integer.valueOf(int)
    /* 0002786A 0C 08             */ move-result-object v8
    /* 0002786C 71 10 01 0C 02 00 */ invoke-static {v2}, java.lang.Integer java.lang.Integer.valueOf(int)
    /* 00027872 0C 00             */ move-result-object v0
    /* 00027874 6E 20 4E 0C 85 00 */ invoke-virtual {v5, v8}, bool java.util.HashMap.containsKey(java.lang.Object)
    /* 0002787A 0A 01             */ move-result v1
    /* 0002787C 38 01 2C 00       */ if-eqz v1, loc_168 // +44
    /* 00027880 6E 20 4F 0C 85 00 */ invoke-virtual {v5, v8}, java.lang.Object java.util.HashMap.get(java.lang.Object)
    /* 00027886 0C 00             */ move-result-object v0
    /* 00027888 1F 00 C3 01       */ check-cast v0, java.lang.Integer
loc_96:
    /* 0002788C 54 B1 FC 02       */ iget-object v1, v11, java.lang.String content
    /* 00027890 22 08 CE 01       */ new-instance v8, java.lang.StringBuilder
    /* 00027894 71 10 25 0C 01 00 */ invoke-static {v1}, java.lang.String java.lang.String.valueOf(java.lang.Object)
    /* 0002789A 0C 01             */ move-result-object v1
    /* 0002789C 70 20 28 0C 18 00 */ invoke-direct {v8, v1}, void java.lang.StringBuilder.<init>(java.lang.String)
    /* 000278A2 6E 10 FC 0B 00 00 */ invoke-virtual {v0}, byte java.lang.Integer.byteValue()
    /* 000278A8 0A 00             */ move-result v0
    /* 000278AA D8 00 00 41       */ add-int/lit8 v0, v0, #int 65 // #41
    /* 000278AE 8E 00             */ int-to-char v0, v0
    /* 000278B0 71 10 ED 0B 00 00 */ invoke-static {v0}, java.lang.Character java.lang.Character.valueOf(char)
    /* 000278B6 0C 00             */ move-result-object v0
    /* 000278B8 6E 20 2C 0C 08 00 */ invoke-virtual {v8, v0}, java.lang.StringBuilder java.lang.StringBuilder.append(java.lang.Object)
    /* 000278BE 0C 00             */ move-result-object v0
    /* 000278C0 6E 10 31 0C 00 00 */ invoke-virtual {v0}, java.lang.String java.lang.StringBuilder.toString()
    /* 000278C6 0C 00             */ move-result-object v0
    /* 000278C8 5B B0 FC 02       */ iput-object v0, v11, java.lang.String content
    /* 000278CC D8 00 03 01       */ add-int/lit8 v0, v3, #int 1 // #01
    /* 000278D0 01 03             */ move v3, v0
    /* 000278D2 28 C1             */ goto loc_40 // -63
loc_168:
    /* 000278D4 01 21             */ move v1, v2
loc_170:
    /* 000278D6 35 41 DB FF       */ if-ge v1, v4, loc_96 // -37
    /* 000278DA 6E 10 FD 0B 08 00 */ invoke-virtual {v8}, int java.lang.Integer.intValue()
    /* 000278E0 0A 09             */ move-result v9
    /* 000278E2 B2 19             */ mul-int/2addr v9, v1
    /* 000278E4 B4 49             */ rem-int/2addr v9, v4
    /* 000278E6 12 1A             */ const/4 v10, #int 1 // #1
    /* 000278E8 33 A9 0E 00       */ if-ne v9, v10, loc_216 // +14
    /* 000278EC 71 10 01 0C 01 00 */ invoke-static {v1}, java.lang.Integer java.lang.Integer.valueOf(int)
    /* 000278F2 0C 00             */ move-result-object v0
    /* 000278F4 6E 30 50 0C 85 00 */ invoke-virtual {v5, v8, v0}, java.lang.Object java.util.HashMap.put(java.lang.Object, java.lang.Object)
    /* 000278FA 71 10 01 0C 01 00 */ invoke-static {v1}, java.lang.Integer java.lang.Integer.valueOf(int)
    /* 00027900 0C 00             */ move-result-object v0
    /* 00027902 28 C5             */ goto loc_96 // -59
loc_216:
    /* 00027904 D8 01 01 01       */ add-int/lit8 v1, v1, #int 1 // #01
    /* 00027908 28 E7             */ goto loc_170 // -25
}
```

And that's it. Writing this post took much longer than the analysis itself (about 10 minutes, if that). Reverse engineering the crackme to find the correct key is beyond the scope of the post, although I'm sure it's fun as well.
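For those who find Dalvik bytecode hard on the eyes, here is my reading of the injected `zoom` method transcribed to Python. It's a sketch, not decompiler output: I'm assuming the input contains only characters from the Basic Multilingual Plane (so that Python's string length matches Java's), and the helper names are mine. Each character is reduced modulo the string length and replaced by its modular multiplicative inverse, or by 0 when no inverse exists; the HashMap only caches inverses that were already computed.

```python
def java_rem(a, b):
    """Remainder with Java/Dalvik semantics: the sign follows the dividend."""
    r = abs(a) % abs(b)
    return -r if a < 0 else r


def to_java_byte(value):
    """Mimic Integer.byteValue(): keep the low 8 bits as a signed byte."""
    return (value + 128) % 256 - 128


def zoom(text):
    """Return what the injected method would store in the 'content' field."""
    n = len(text)          # v4: String.length(); assumes BMP-only input
    inverses = {}          # v5: the HashMap used as a cache
    content = []
    for ch in text:
        key = java_rem(ord(ch) - 65, n)
        if key in inverses:
            value = inverses[key]
        else:
            value = 0      # default when no inverse exists
            for candidate in range(n):
                if java_rem(key * candidate, n) == 1:
                    inverses[key] = candidate
                    value = candidate
                    break
        # byteValue(), add 65, int-to-char
        content.append(chr((to_java_byte(value) + 65) & 0xFFFF))
    return "".join(content)
```

For instance, with the five-character input "ABCDE" the letters C and D swap places (2 and 3 are each other's inverses modulo 5), so `zoom("ABCDE")` gives "ABDCE".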

Thanks to BlueBox for the crackme!